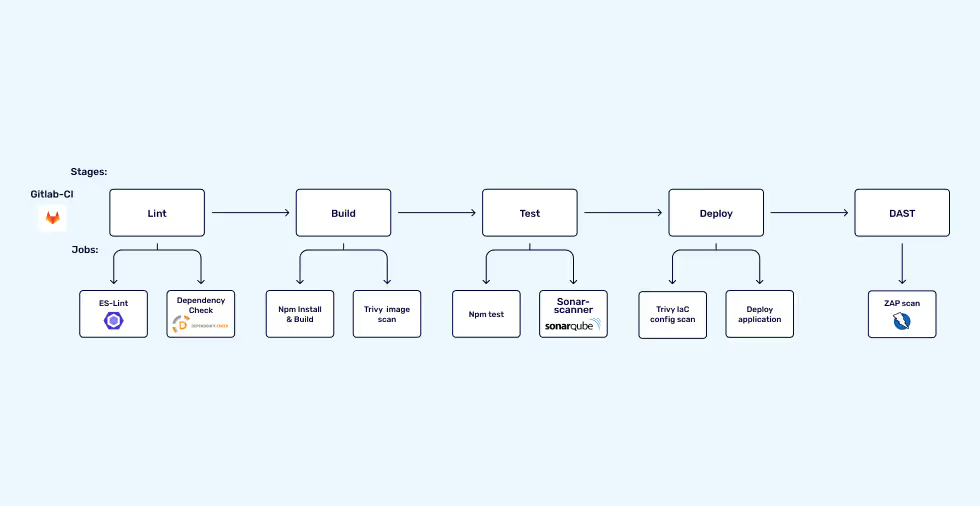

When integrating security tests into a CI pipeline, you perform either static analysis or dynamic analysis. Static analysis typically involves software composition analysis, secrets detection, and identifying coding flaws and security misconfigurations, while dynamic analysis involves vulnerability scanning of the running web application. Static analysis can run before or after a project has been built with its build commands, while dynamic analysis runs after the deploy stage.

In some cases, especially with containerized applications, you can perform dynamic analysis before deploying the application to production, either within the pipeline itself or, where the application has multiple service dependencies, in an environment dedicated to dynamic analysis.

Static and Dynamic Analysis breakdown

In Part 1, I introduced some considerations for selecting security tools for CI pipelines; tool selection is an important factor when designing your security testing workflow.

A security testing workflow should cover both the software and infrastructure aspects of security. The diagram below distinguishes different types of security testing activities according to which aspect they cover.

To design a security testing workflow for a CI pipeline, I will use an example organisation to walk through the main considerations and the decisions that best fit its security workflow.

XYZ Org Example

XYZ org wants to implement security testing for its software applications, a mix of backend API services and frontend apps, all written in Node.js. Its projects are hosted on GitLab SCM, and the company has provided the following requirements:

In addition to the security testing requirements, XYZ org would like to keep pipeline runtime as short as possible so that frequent changes can still be pushed to production quickly.

Finally, to comply with internal and external security policies, XYZ org would like to fix all Critical and High vulnerabilities before deploying changes to production, and add lower-severity issues to its backlog.
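This severity policy can be expressed as a simple quality gate. The sketch below is only an illustration: it assumes scan findings have already been normalised into dictionaries with a `severity` key, and the names `triage` and `gate` are hypothetical, not part of any scanner's output format or API.

```python
# Severities that must be fixed before deploying to production (per the policy).
BLOCKING_SEVERITIES = {"critical", "high"}


def triage(findings):
    """Split findings into (blocking, backlog) lists by severity.

    Each finding is assumed to be a dict with a "severity" key; mapping a
    real scanner's report into this shape is left out of the sketch.
    """
    blocking, backlog = [], []
    for finding in findings:
        severity = str(finding.get("severity", "unknown")).lower()
        if severity in BLOCKING_SEVERITIES:
            blocking.append(finding)
        else:
            backlog.append(finding)
    return blocking, backlog


def gate(findings):
    """Return an exit code: 1 blocks the production deploy, 0 allows it."""
    blocking, backlog = triage(findings)
    for finding in blocking:
        print(f"BLOCKING [{finding['severity']}] {finding.get('title', '<untitled>')}")
    print(f"{len(backlog)} lower-severity finding(s) for the backlog")
    return 1 if blocking else 0
```

A CI job placed in a stage before the production deploy could end with `sys.exit(gate(findings))`, so that a non-zero exit code fails the job and stops the deployment. Findings without a recognised severity go to the backlog here; a stricter policy might block them instead.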

Let us look at two considerations that will be useful in meeting XYZ org’s requirements:

Some drawbacks of using this approach are:

DAG Pipeline: Directed Acyclic Graph (DAG) pipelines work by using the ‘needs’ keyword, which lets a job in a later stage start as soon as the specific jobs it depends on have finished, instead of waiting for every job in the current stage to finish. This can shorten pipeline runtime, provided the relationships between jobs are defined correctly.

In the workflow above, the jobs needed to build and deploy the application are linked with the ‘needs’ keyword, so the deployment path does not wait for the security jobs: the application takes the same time to deploy while the security jobs run in parallel. This method suits deployments to development or staging environments, where you don't want security checks to slow down developers implementing and testing their changes. The main drawback of this workflow is that quality gating on security vulnerabilities is less effective than in the basic pipeline model, because the deployment can finish before the security jobs report their results.
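To make the timing trade-off concrete, here is a simplified model of the two scheduling behaviours. It is not GitLab's scheduler: it assumes unlimited runners and no queueing, and the job names and durations are hypothetical. A job with `needs=None` waits for every job in earlier stages (the basic pipeline), while a job with a `needs` list waits only for the jobs it lists.

```python
# Hypothetical pipeline: job name -> (stage index, duration in minutes, needs).
# needs=None behaves like a job with no `needs` (waits for all earlier stages);
# a list behaves like `needs:` (waits only for the listed jobs).
JOBS = {
    "build": (0, 3, None),
    "sast": (0, 6, None),
    "dependency_scan": (0, 4, None),
    "deploy_staging": (1, 2, ["build"]),
    "dast": (2, 10, ["deploy_staging"]),
}


def finish_times(jobs, use_needs=True):
    """Earliest finish time of every job, assuming unlimited parallel runners.

    With use_needs=False, every job falls back to stage ordering, which
    models the basic (non-DAG) pipeline.
    """
    memo = {}

    def finish(name):
        if name not in memo:
            stage, duration, needs = jobs[name]
            if use_needs and needs is not None:
                deps = needs
            else:
                deps = [other for other, (s, _, _) in jobs.items() if s < stage]
            start = max((finish(dep) for dep in deps), default=0)
            memo[name] = start + duration
        return memo[name]

    return {name: finish(name) for name in jobs}


basic = finish_times(JOBS, use_needs=False)
dag = finish_times(JOBS)
print("basic deploy:", basic["deploy_staging"], "DAG deploy:", dag["deploy_staging"])
# prints "basic deploy: 8 DAG deploy: 5"
```

In the DAG run, `deploy_staging` finishes at minute 5, before `sast` finishes at minute 6, which illustrates the drawback described above: a failing SAST job can no longer stop that deployment.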

GitLab supports other pipeline types, such as parent-child pipelines, merge request pipelines and merged results pipelines, which can also shape how you implement security testing. You can also use tag and scheduled pipelines to control when security tests run.

When deploying to multiple environments, choosing the right deployment strategy is very important. For example, if XYZ org maintains three deployment environments (dev, staging and production), it could use tag pipelines for software releases and run the DAST stage in those tag pipelines.

Job controls (rules, only and except) determine when specific jobs run. For example, XYZ org can choose to run dependency analysis jobs only when a commit or merge request changes the package manifests.
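In a `.gitlab-ci.yml` file this is normally declared with `rules: changes:` on the dependency analysis job. The Python sketch below only mirrors that decision so it is easy to read and test; the function name is hypothetical, and obtaining the list of changed files (for example with `git diff --name-only`) is left out. The manifest names assume npm, Yarn or pnpm projects.

```python
from pathlib import PurePosixPath

# Node.js manifests and lockfiles; adjust to the package managers in use.
MANIFESTS = {
    "package.json",
    "package-lock.json",
    "npm-shrinkwrap.json",
    "yarn.lock",
    "pnpm-lock.yaml",
}


def should_run_dependency_scan(changed_files):
    """True if any changed path is a package manifest, in any directory."""
    return any(PurePosixPath(path).name in MANIFESTS for path in changed_files)
```

Matching on the file name rather than the full path means a monorepo with several services (for example `services/api/package.json`) still triggers the scan.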

Scheduled pipelines can also run dependency analysis and image scanning on a regular basis to identify newly disclosed CVEs, even when the code itself has not changed.
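The trigger-based choices described above (DAST in tag pipelines, dependency analysis when manifests change, dependency and image scanning on a schedule) can be sketched as one function over GitLab's predefined variables `CI_PIPELINE_SOURCE` (equal to `"schedule"` for scheduled pipelines) and `CI_COMMIT_TAG` (set only in tag pipelines). In a real pipeline these conditions would live in each job's `rules: if:` clauses; the job names and the function are hypothetical.

```python
def security_jobs_for(env, manifests_changed=False):
    """Choose security jobs for a pipeline from GitLab predefined variables.

    `env` is any mapping such as os.environ; job names are illustrative.
    """
    source = env.get("CI_PIPELINE_SOURCE", "")
    if source == "schedule":
        # Periodic re-scan to catch newly disclosed CVEs in unchanged code.
        return {"dependency_scan", "image_scan"}
    # Static checks run on every push or merge request pipeline.
    jobs = {"sast", "secrets_detection"}
    if manifests_changed:
        jobs.add("dependency_scan")
    if env.get("CI_COMMIT_TAG"):
        # Release (tag) pipelines also run DAST before production.
        jobs.add("dast")
    return jobs
```

Called as `security_jobs_for(os.environ)` inside a job, a tag pipeline for a release would return the static jobs plus `dast`, while a scheduled pipeline would skip the static checks and only rescan dependencies and images.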

Some things to note when implementing security testing:

There are two major ways to address security issues discovered in a CI pipeline:

Depending on your pipeline strategy, you can use either of the above methods to address security issues.

I will use the following contexts to explain further:

Context A:

Context B:

XYZ org’s decision will also influence its tool selection. In Context A, XYZ org would prioritize standalone or Dockerized tools that run seamlessly without requiring a subscription to an API integration platform. Open-source and internally developed tools would be the go-to choices for implementing security testing. Being able to view scan results as pipeline artefacts or in job output would also be essential for addressing security vulnerabilities.
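For platform-less tooling, results have to surface in the job log or as artefacts. The sketch below assumes findings are JSON-serialisable dictionaries with a `severity` key; the function name and the default file name `security-summary.json` are hypothetical. It prints per-severity counts to the job output and writes the full findings to a JSON file.

```python
import json
from collections import Counter
from pathlib import Path

# Display order for the job-log summary; unrecognised severities print last.
SEVERITY_ORDER = ["critical", "high", "medium", "low", "info", "unknown"]


def summarise(findings, artifact_path="security-summary.json"):
    """Print per-severity counts to the job log and save a JSON artefact.

    Findings are assumed to be JSON-serialisable dicts with a "severity" key.
    """
    counts = Counter(str(f.get("severity", "unknown")).lower() for f in findings)
    rank = {name: i for i, name in enumerate(SEVERITY_ORDER)}
    for severity in sorted(counts, key=lambda s: rank.get(s, len(rank))):
        print(f"{severity:>8}: {counts[severity]}")
    report = {"counts": dict(counts), "findings": findings}
    Path(artifact_path).write_text(json.dumps(report, indent=2))
    return counts
```

The JSON file is only kept after the job finishes if its path is listed under `artifacts: paths:` in the job definition.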

Some examples of tools to consider in this context are:

In Context B, a key criterion for tool selection is the ability to visualize scan results on a dashboard or platform, so XYZ org would opt for more complex open-source tools or commercial products.

Some examples of tools to consider include:

Other factors to consider when implementing a security testing strategy include:
